WARNING: This website is obsolete! Please follow this link to get to the new Albert@Home website!

Project server code update


**Claggy** · Joined: 29 Dec 06 · Posts: 78 · Credit: 4,040,969 · RAC: 0

I attached a new host to Albert. Looking through the logs, I keep getting the following download error:

- `14-Jun-2014 06:06:32 [Albert@Home] Started download of eah_slide_05.png`
- `14-Jun-2014 06:06:32 [Albert@Home] Started download of eah_slide_07.png`
- `14-Jun-2014 06:06:32 [Albert@Home] Started download of eah_slide_08.png`
- `14-Jun-2014 06:06:33 [Albert@Home] Finished download of eah_slide_07.png`
- `14-Jun-2014 06:06:33 [Albert@Home] Started download of EatH_mastercat_1344952579.txt`
- `14-Jun-2014 06:06:34 [Albert@Home] Finished download of eah_slide_05.png`
- `14-Jun-2014 06:06:34 [Albert@Home] Finished download of eah_slide_08.png`
- `14-Jun-2014 06:06:34 [Albert@Home] Giving up on download of EatH_mastercat_1344952579.txt: permanent HTTP error`

On this new host (as well as on my HD7770) I'm still getting the very short estimates for Perseus Arm Survey GPU tasks, so I've added two zeros to the rsc_fpops values so they'll complete.

Computer 11441

Claggy
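Why inflating the rsc_fpops values lets such tasks finish can be sketched as follows. This assumes, as in the BOINC client, that the elapsed-time limit is `rsc_fpops_bound` divided by the projected FLOPS of the app version, and that the runtime estimate is `rsc_fpops_est` divided by the same figure. All numbers and helper names below are hypothetical, chosen only to mimic a grossly overestimated GPU speed.

```python
# Hypothetical sketch: why a grossly overestimated device speed makes tasks
# hit the client's elapsed-time limit, and why scaling the rsc_fpops values
# by 100 ("adding two zeros") lets them finish. Numbers are illustrative only.

def runtime_estimate(rsc_fpops_est: float, projected_flops: float) -> float:
    """Estimated seconds to completion (assumed: fpops estimate / speed)."""
    return rsc_fpops_est / projected_flops


def elapsed_time_limit(rsc_fpops_bound: float, projected_flops: float) -> float:
    """Seconds after which the task is aborted (assumed: fpops bound / speed)."""
    return rsc_fpops_bound / projected_flops


PROJECTED_FLOPS = 1e13   # hypothetical, hundreds of times the real throughput
ACTUAL_RUNTIME = 3600.0  # hypothetical real runtime in seconds
FPOPS_EST = 1e14         # hypothetical rsc_fpops_est
FPOPS_BOUND = 1e15       # hypothetical rsc_fpops_bound (10x the estimate)

for factor in (1, 100):  # 100 == "two zeros" appended to both values
    est = runtime_estimate(FPOPS_EST * factor, PROJECTED_FLOPS)
    limit = elapsed_time_limit(FPOPS_BOUND * factor, PROJECTED_FLOPS)
    outcome = "completes" if ACTUAL_RUNTIME <= limit else "aborted at limit"
    print(f"x{factor}: estimate {est:.0f} s, limit {limit:.0f} s -> {outcome}")
```

Note that the edit only raises the limit; it does nothing about the underlying speed overestimate.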

**Snow Crash** · Joined: 11 Aug 13 · Posts: 10 · Credit: 5,011,603 · RAC: 0

Updated details:

| OS | BOINC | CPU | GPU | Utilization Factor |
|---|---|---|---|---|
| Win7 | 7.2.42 | 980x | 670 | 0.50 |
| Win7 | 7.2.42 | 920 | 7950 | 0.33 |
| Win7 | 7.2.42 | 4670k | 7850 | 0.50 |

|

Richard Haselgrove Send message Joined: 10 Dec 05 Posts: 450 Credit: 5,409,572 RAC: 0 |

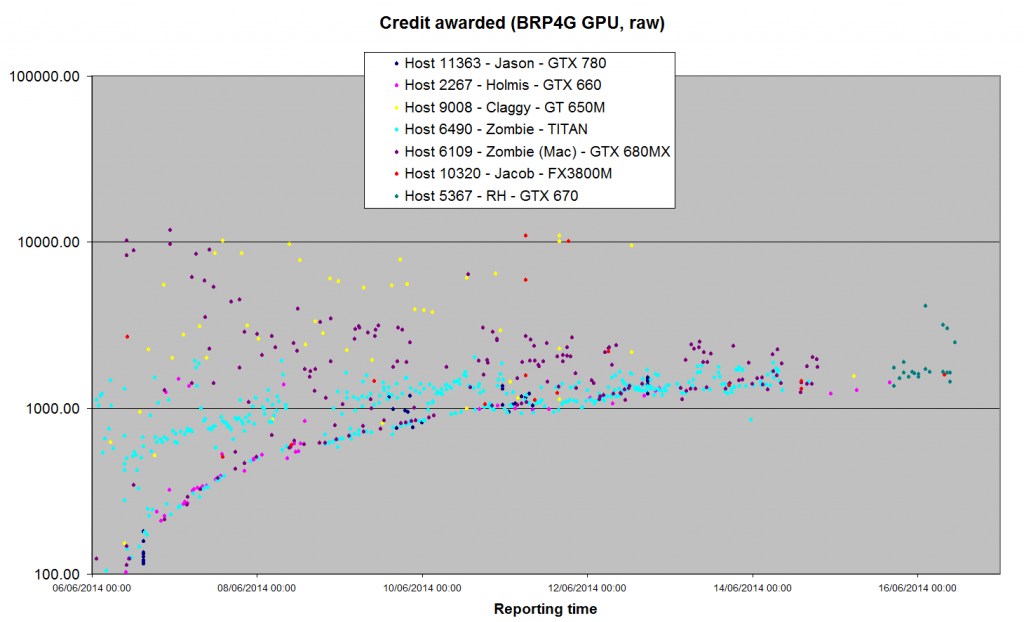

The GPU plot continues to thicken. Some of the machines I picked to monitor seem to have dropped out of the running, so I added one of my own yesterday.  It seems to have started in line with current trends, but like the others, there seem to be distinct 'upper' and 'lower' credit groupings. Why would that be? I've also noticed something that doesn't show with this style of plotting against reporting time: when I've been infilling late validations, the credit awards - in line with the trendlines - have been much higher than their contemporaries got, and the double population is visible there too. For example, Claggy's WU 606387 from 5 June was awarded over 7K overnight. That should show up in the changing averages: Jason Holmis Claggy Zombie ZombieM Jacob RH Host: 11363 2267 9008 6490 6109 10320 5367 GTX 780 GTX 660 GT 650M TITAN 680MX FX3800M GTX 670 Credit for BRP4G, GPU Maximum 1584.75 1495.38 10951.9 2031.40 11847.5 10951.9 4137.85 Minimum 115.82 88.84 153.90 91.50 94.88 508.73 1355.49 Average 813.32 688.57 4120.83 1074.52 2010.65 2743.09 1917.71 Median 973.50 539.19 3037.38 1122.70 1591.80 1456.42 1641.43 Std Dev 523.51 428.48 3074.79 393.82 1823.08 3177.59 703.78 nSamples 36 52 48 387 189 17 21 (Why does the new web code double-space [ pre ]?) Edit - corrected copy/paste error on application type. |
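The summary rows in the table (Maximum, Minimum, Average, Median, Std Dev, nSamples) can be reproduced from a host's list of per-task credit awards along these lines. This is a sketch, not the spreadsheet actually used: it assumes 'Std Dev' is the sample standard deviation (as a spreadsheet's STDEV gives), and the helper name is hypothetical.

```python
import statistics


def summarize_credits(credits: list[float]) -> dict[str, float]:
    """Build the summary rows for one host column (hypothetical helper)."""
    return {
        "Maximum": max(credits),
        "Minimum": min(credits),
        "Average": statistics.mean(credits),
        "Median": statistics.median(credits),
        # Sample standard deviation (n - 1 denominator); an assumption.
        "Std Dev": statistics.stdev(credits),
        "nSamples": len(credits),
    }


if __name__ == "__main__":
    # Placeholder values, not real credit awards.
    print(summarize_credits([1.0, 2.0, 3.0, 4.0]))
```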

**Eyrie** · Joined: 20 Feb 14 · Posts: 47 · Credit: 2,410 · RAC: 0

Interesting. Of course, credit is assigned at validation time, not reporting time, against a moving scale, yadda yadda. So late validations earn more. The wingmate is then either a slow host or has a larger cache. The first is in line with expectations, IIRC; the second would be puzzling. Then again, if you are observing a chaotic system, it's hard to know what may or may not happen anyway...

Queen of Aliasses, wielder of the SETI rolling pin, Mistress of the red shoes, Guardian of the orange tree, Slayer of very small dragons.
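Eyrie's point, that an identical claim earns more when it is validated later while the scale is drifting upward, can be shown with a toy model. The linear drift and the function names are hypothetical; the real scale is updated from validated results rather than given by a formula in time.

```python
def scale_at(day: float) -> float:
    """Hypothetical credit scale, drifting upward by 5% of its start value per day."""
    return 1.0 + 0.05 * day


def credit_awarded(raw_claim: float, validation_day: float) -> float:
    """Credit is fixed at validation time, so that day's scale applies."""
    return raw_claim * scale_at(validation_day)


# Same claim, reported together; one validates promptly, one waits for a slow wingmate.
prompt = credit_awarded(1000.0, validation_day=1.0)
late = credit_awarded(1000.0, validation_day=10.0)
print(prompt, late)
```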

**jason_gee** · Joined: 4 Jun 14 · Posts: 109 · Credit: 1,043,639 · RAC: 0

Interesting. Yes, the two mixed and partially overlapping time domains for the credit mechanism (issue time and validation time) both oscillate and interact. Combined, those two are enough to set up a resonance by themselves (additive and subtractive), but with the natural variation in real-world processing thrown in, you introduce one or more further 'bodies', making the system an unpredictable n-body problem.

For our purposes the dominant overlap is in those time domains, so damping each control point enough to place it in a separate time domain should be enough to break the chaotic behaviour, e.g.:

- global pfc_scale: minimise the coarse scaling error and vary smoothly over some hundreds to thousands of task validations
- host (app version) scale: vary smoothly over some tens of validations, small enough to respond to hardware or app changes in 'reasonable' time (number of tasks)
- client side (currently either disabled or broken by the project DCF not being per-application): vary smoothly over a few to 10 tasks

Once separated in time, this coarse->fine->finer tuning is difficult to destabilise, even with a fairly sudden, aggressive change at any point.

On two occasions I have been asked, "Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?" ... I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question. - C Babbage
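One way to picture the separation of time domains is three exponential moving averages with very different spans, each absorbing the same sudden step. This is a sketch under stated assumptions: the spans (1000, 20 and 5 samples), the class name and the step input are hypothetical, and it shows only how far apart the response times sit, not how the tiers are chained in BOINC's credit code.

```python
from dataclasses import dataclass


@dataclass
class SmoothedScale:
    """Exponential moving average that settles over roughly `span` samples."""

    span: float
    value: float = 1.0

    def update(self, sample: float) -> float:
        alpha = 1.0 / self.span  # smaller alpha = slower, heavier damping
        self.value += alpha * (sample - self.value)
        return self.value


# Hypothetical spans loosely following the three tiers listed above.
tiers = {
    "global pfc_scale": SmoothedScale(span=1000),
    "host (app version) scale": SmoothedScale(span=20),
    "client side": SmoothedScale(span=5),
}

# A sudden step: every validation now reports a ratio of 2.0 instead of 1.0.
for _ in range(20):
    for tier in tiers.values():
        tier.update(2.0)

for name, tier in tiers.items():
    print(f"{name:26s} {tier.value:.3f}")
```

After 20 samples the client-side tier has almost fully followed the step, the host tier is roughly two-thirds of the way there, and the global scale has barely moved, so a disturbance at one level is largely absorbed before the slower levels react.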

**Richard Haselgrove** · Joined: 10 Dec 05 · Posts: 450 · Credit: 5,409,572 · RAC: 0

The few wingmates I've spot-checked against high credit awards (my own and others) don't seem to be outside the normal population. They too show bi-modal distributions, and high credits don't seem to be correlated with varying runtimes. I'm trying to run the GTX 670 as stable as possible, and runtimes are very steady. Which leaves the moving average theory. Two more tasks have both reported and validated since I posted the graph: they both got over 2,000 credits, which suggests the average is still moving upwards. |

**zombie67 [MM]** · Joined: 10 Oct 06 · Posts: 130 · Credit: 30,924,459 · RAC: 0

Just FYI, I had to take my two CUDA machines off Albert for a while. I need to help a teammate at another project. I will be back.

Dublin, California · Team: SETI.USA

**jason_gee** · Joined: 4 Jun 14 · Posts: 109 · Credit: 1,043,639 · RAC: 0

> The few wingmates I've spot-checked against high credit awards (my own and others) don't seem to be outside the normal population. They too show bi-modal distributions, and high credits don't seem to be correlated with varying runtimes. I'm trying to run the GTX 670 as stable as possible, and runtimes are very steady.

Yes, the beauty of testing here has been the *relatively* consistent runtimes on a given host/GPU, which tends to demonstrate that much of the instability is artificially induced (as opposed to being a direct function of noisy elapsed times). The upward trend would appear to be a drift instability, and bets are off as to whether it continues indefinitely, levels off, or (my guess) starts a downward cycle after some peak.

The bi-modal characteristic appears to be an oscillation between saturation and cutoff, most likely related to turnaround time. That'd be predominantly proportional error ('overshoot'). [Post-scaling by the average of the validated claims isn't going to help that, though it was a valiant attempt to improve on the original choice of taking the minimum claim.]
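The 'saturation and cutoff' pattern described here is the classic behaviour of a proportional-only correction whose gain is too high. The following is a generic control-theory sketch, not BOINC code: the gains, target and clamp limits are hypothetical, with the upper clamp standing in for saturation and the lower one for cutoff.

```python
def simulate(gain: float, target: float = 1.0, start: float = 0.0,
             lo: float = 0.0, hi: float = 2.0, steps: int = 8) -> list[float]:
    """Apply x += gain * (target - x) repeatedly, clamped to [lo, hi]."""
    x = start
    history = []
    for _ in range(steps):
        x += gain * (target - x)  # proportional-only correction
        x = min(max(x, lo), hi)   # saturation at hi, cutoff at lo
        history.append(round(x, 6))
    return history


print(simulate(0.5))  # gentle gain: creeps up to the target, no overshoot
print(simulate(1.5))  # overshoots, then rings down towards the target
print(simulate(2.5))  # overshoot too large: bounces between 2.0 and 0.0
```

With the highest gain the state never settles; it alternates between the two limits, which would show up as two separate clusters rather than one spread around the target.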

**Eyrie** · Joined: 20 Feb 14 · Posts: 47 · Credit: 2,410 · RAC: 0

Heisenberg? My Maths teacher? Chaos theory? Zen? Superstitious pigeons? In a chaotic system, an observed trend may be genuine or coincidental. And in any case, we shouldn't be putting too much effort into observing the scintillations of a soap bubble we intend to burst. Of course, the colour pattern is fascinating indeed...

**jason_gee** · Joined: 4 Jun 14 · Posts: 109 · Credit: 1,043,639 · RAC: 0

> Heisenberg? My Maths teacher? Chaos theory? Zen? Superstitious pigeons?

Good. What I'm doing at the moment is drafting goals for the first patch. A lot of that will involve confirming or rejecting particular suspects for the purposes of isolation. I do think the observations, or 'getting a feel' for the character of what it's doing, are important at the moment... and the patterns are pretty to look at... but you're right: I'd like to have the full first-pass patch ready for trials by the time Bernd's back on duty...

Outline for pass 1:

- Objectives and scope
- Resources
- Procedure
- Results
- Discussion of results
- Conclusions and further work (plan for pass 2)

**Richard Haselgrove** · Joined: 10 Dec 05 · Posts: 450 · Credit: 5,409,572 · RAC: 0

I've been asked to pull out stats on runtime and turnround, in case they explain anything - I don't think they do.

| | Jason | Holmis | Claggy | Zombie | ZombieM | Jacob | RH |
|---|---|---|---|---|---|---|---|
| Host | 11363 | 2267 | 9008 | 6490 | 6109 | 10320 | 5367 |
| GPU | GTX 780 | GTX 660 | GT 650M | TITAN | 680MX | FX3800M | GTX 670 |
| **Credit for BRP4G, GPU** | | | | | | | |
| Maximum | 1584.75 | 1495.38 | 10952.0 | 2031.40 | 11847.5 | 10952.0 | 4137.85 |
| Minimum | 115.82 | 88.84 | 153.90 | 91.50 | 94.88 | 508.73 | 1355.49 |
| Average | 813.32 | 688.57 | 4120.83 | 1074.52 | 2010.65 | 2743.09 | 1890.82 |
| Median | 973.50 | 539.19 | 3037.38 | 1122.70 | 1591.80 | 1456.42 | 1644.33 |
| Std Dev | 523.51 | 428.48 | 3074.79 | 393.82 | 1823.08 | 3177.59 | 614.08 |
| nSamples | 36 | 52 | 48 | 387 | 189 | 17 | 28 |
| **Runtime (seconds)** | | | | | | | |
| Maximum | 4401.98 | 5088.99 | 11295.0 | 5383.71 | 23977.4 | 11774.3 | 4138.67 |
| Minimum | 3259.10 | 3294.83 | 8136.47 | 1908.26 | 1512.16 | 11515.5 | 4061.45 |
| Average | 3668.57 | 4483.11 | 8910.76 | 4194.81 | 4216.60 | 11623.3 | 4102.53 |
| Median | 3603.24 | 4608.05 | 8837.27 | 4228.18 | 4183.74 | 11603.6 | 4109.83 |
| Std Dev | 314.13 | 506.27 | 554.34 | 555.88 | 1981.31 | 70.73 | 25.21 |
| **Turnround (days)** | | | | | | | |
| Maximum | 2.11 | 3.91 | 1.70 | 3.44 | 2.94 | 5.57 | 0.72 |
| Minimum | 0.15 | 0.07 | 0.14 | 0.24 | 1.52 | 0.18 | 0.15 |
| Average | 1.14 | 1.97 | 0.69 | 2.10 | 2.32 | 1.64 | 0.44 |
| Median | 0.73 | 1.90 | 0.74 | 2.00 | 2.42 | 0.88 | 0.48 |
| Std Dev | 0.79 | 0.98 | 0.35 | 0.57 | 0.32 | 1.68 | 0.17 |

Zombie's Mac had just two of those extra-long runtimes:

- WU 612084: 1,328.76 cr
- WU 612045: 6,419.08 cr

Edit: I updated my own column since this morning; the others are unchanged.
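One quick way to compare the spread of credit with the spread of runtime is the coefficient of variation (standard deviation divided by mean), computed here from the Average and Std Dev rows of the table. This is an illustrative check, not part of the original analysis; the variable names are arbitrary.

```python
# Average and Std Dev rows copied from the table (same host order as its columns).
hosts = ["Jason", "Holmis", "Claggy", "Zombie", "ZombieM", "Jacob", "RH"]
credit_avg = [813.32, 688.57, 4120.83, 1074.52, 2010.65, 2743.09, 1890.82]
credit_sd = [523.51, 428.48, 3074.79, 393.82, 1823.08, 3177.59, 614.08]
runtime_avg = [3668.57, 4483.11, 8910.76, 4194.81, 4216.60, 11623.3, 4102.53]
runtime_sd = [314.13, 506.27, 554.34, 555.88, 1981.31, 70.73, 25.21]


def cv(sd: float, mean: float) -> float:
    """Coefficient of variation: relative spread, independent of units."""
    return sd / mean


for name, ca, cs, ra, rs in zip(hosts, credit_avg, credit_sd, runtime_avg, runtime_sd):
    print(f"{name:8s} credit CV {cv(cs, ca):5.2f}   runtime CV {cv(rs, ra):5.3f}")
```

For every host the relative spread in credit is larger than the relative spread in runtime (for RH, roughly 0.32 versus 0.006), which is consistent with the view that runtime variation alone does not explain the credit scatter.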

**Eyrie** · Joined: 20 Feb 14 · Posts: 47 · Credit: 2,410 · RAC: 0

Hmm, maybe. I'd need to eyeball the code again to be more certain. The sample size is a bit small too; maybe in a few days.

**Holmis** · Joined: 4 Jan 05 · Posts: 104 · Credit: 2,104,736 · RAC: 0

I'll add that my GTX 660 Ti is running two tasks at a time, mixing BRP4G and BRP5 from Albert and BRP5 from Einstein. The Intel HD4000 is running single tasks.

Here's an updated Excel file with data and plots from host 2267 and the following searches: BRP4X64, BRP4G, S6CasA, BRP5 (iGPU) and BRP5 (Nvidia GPU).

**jason_gee** · Joined: 4 Jun 14 · Posts: 109 · Credit: 1,043,639 · RAC: 0

> ... in case they explain anything - I don't think they do.

We'll explore any possibility, for sure. From an engineering perspective, understanding the spoon doing the stirring is probably a good idea [though asking it what it's doing might not reveal much :) ]. We probably won't try getting rid of the spoon, but rather change what it's mixing: fine granulated sugar and some honey, instead of a solid hunk of extra-thick molasses.

**Holmis** · Joined: 4 Jan 05 · Posts: 104 · Credit: 2,104,736 · RAC: 0

Nvidia GPU: A follow-up on my post about initial estimates: the 11th BRP5 task has now been validated, and the APR has been calculated at 30.16 GFLOPS when running two tasks at a time. So the initial estimate put the card at a whopping 412.9 times faster than actual! =O
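Taking that ratio at face value, the speed the server initially assumed for the card can be back-calculated from the measured APR. A small arithmetic sketch (the variable names are illustrative):

```python
apr_gflops = 30.16    # measured APR per task, two tasks at a time (from the post)
overestimate = 412.9  # initial estimate / actual, as reported

implied_initial_gflops = apr_gflops * overestimate
print(f"implied initial estimate: {implied_initial_gflops:,.0f} GFLOPS per task")
```

That is roughly 12.5 TFLOPS assumed per task, against about 30 GFLOPS actually delivered.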

**jason_gee** · Joined: 4 Jun 14 · Posts: 109 · Credit: 1,043,639 · RAC: 0

> Nvidia GPU:

Yeah, for my 780 the initial guesstimates were 3 seconds, and tasks came in at about an hour, so roughly a thousand-fold discrepancy. Room for optimisation in the application doesn't account for the whole discrepancy (of course :) ). Looks like there'll be some digging along these lines to do. Some factors to look at with new GPU apps and hosts might include:

- CPU app underclaim becomes the global scaling reference. We know these raw claims leave out SIMD (SSE->AVX), so the apps look more efficient than they are; we can tell because certain numbers come out 'impossible' if the logic were right (e.g. pfc_scale < 1) [perhaps up to a 10x discrepancy]
- GPU overestimate of performance (attempting to be generous) [could be in the ballpark of another 10x discrepancy]
- Actual utilisation variation (e.g. from people using their machines, multiple tasks per GPU...more...) [combined, perhaps up to another 10x]
- Room for optimisation of the application(s), or inherent serial limitations [maybe anywhere from 1x to a very big discrepancy; let's go with another 10x]

So, all told, with guesstimates, a 1000x discrepancy is easily plausible just among known factors, and there may be more... That's completely ignoring the possibility of hard-logic flaws, which are entirely possible, even likely.

All of that will probably depend on some of the customisations and special situations here at Albert, still to be examined in context. These include if and how some apps are 'pure GPU' or otherwise packages of multiple CPU-like tasks, and how they are wired in and scaled. On the surface it looks like several of these things may well be in play.

These all seem connected, so I suspect we'll need to make patch one multi-step, switching in one small change at a time and watching for weird interactions. For example: fix the CPU-side coarse scale error, then watch the GPU estimate and/or credit blow out in response... LoL
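Multiplying the rough per-factor guesses shows why a thousand-fold gap is unsurprising: four independent ~10x factors compound to an upper bound of around 10,000x. The factor labels and the 10x values are guesstimates from the list, not measurements; the observed figure uses the 3 s estimate against roughly an hour of runtime.

```python
import math

# Rough, guessed upper bounds for each factor (from the list of suspects).
factors = {
    "CPU underclaim as global reference": 10,
    "GPU performance overestimate": 10,
    "actual utilisation variation": 10,
    "application optimisation headroom": 10,
}

combined = math.prod(factors.values())
observed = 3600 / 3  # ~1 hour actual runtime vs 3 s initial estimate

print(f"combined guesstimate: up to {combined}x; observed on the 780: ~{observed:.0f}x")
```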

**Eyrie** · Joined: 20 Feb 14 · Posts: 47 · Credit: 2,410 · RAC: 0

> ... in case they explain anything - I don't think they do.

'There is no spoon' ;)

**Eyrie** · Joined: 20 Feb 14 · Posts: 47 · Credit: 2,410 · RAC: 0

> Nvidia GPU:

Must be picking up extra factors from somewhere. It might be due to the fact that (as Eric stated) there is no damping of GPU figures by CPU figures. But there are one or more massive scaling errors lurking around. Dang. That code is a nightmare to walk. It might not all be flops scaling error, of course; I need to cast an eye over the rsc_fpops_est calculation as well...

**Richard Haselgrove** · Joined: 10 Dec 05 · Posts: 450 · Credit: 5,409,572 · RAC: 0

> might not all be flops scaling error of course, need to cast an eye over the rsc_fpops_est calculation as well...

Since I'm not running anonymous platform, rsc_fpops_est isn't being scaled. I'm seeing:

- fpops_est: 280,000,000,000,000
- FLOPs: 69,293,632,242
- APR: 69.29 GFLOPS

Order-of-magnitude sanity check: 280e12 fpops / 70e9 flops/sec --> 4e3 seconds runtime estimate. Correct on both server and client, and sane.

Edit: an identical card in the same host has an APR of 156.36 at GPUGrid. I'm running one task at a time there and two at a time here, so I'd say the speed estimates agree. IOW, runtime estimates are OK once the initial gross mis-estimation has been overcome: this bit of the quest is for credit purposes only.
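The sanity check can be reproduced directly from the quoted figures; the exact quotient lands close to 4,000 s. The second calculation simply doubles the per-task APR to compare with the one-task-at-a-time GPUGrid figure, assuming the two concurrent tasks share the card evenly.

```python
fpops_est = 280_000_000_000_000  # rsc_fpops_est for the task
flops = 69_293_632_242           # projected FLOPS for the app version (APR)

runtime_estimate_s = fpops_est / flops
print(f"runtime estimate: {runtime_estimate_s:,.0f} s")

# Two tasks at a time here vs one at a time at GPUGrid (APR 156.36 GFLOPS).
card_total_gflops = 2 * 69.29
print(f"card total here: {card_total_gflops:.2f} GFLOPS vs 156.36 at GPUGrid")
```

The two totals (about 139 vs 156 GFLOPS) are within roughly 13% of each other.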

**Eyrie** · Joined: 20 Feb 14 · Posts: 47 · Credit: 2,410 · RAC: 0

Ta. Looks like the fpops estimate isn't too shabby, then. But you need to exclude that contribution.

This material is based upon work supported by the National Science Foundation (NSF) under Grant PHY-0555655 and by the Max Planck Gesellschaft (MPG). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the investigators and do not necessarily reflect the views of the NSF or the MPG.

Copyright © 2024 Bruce Allen for the LIGO Scientific Collaboration